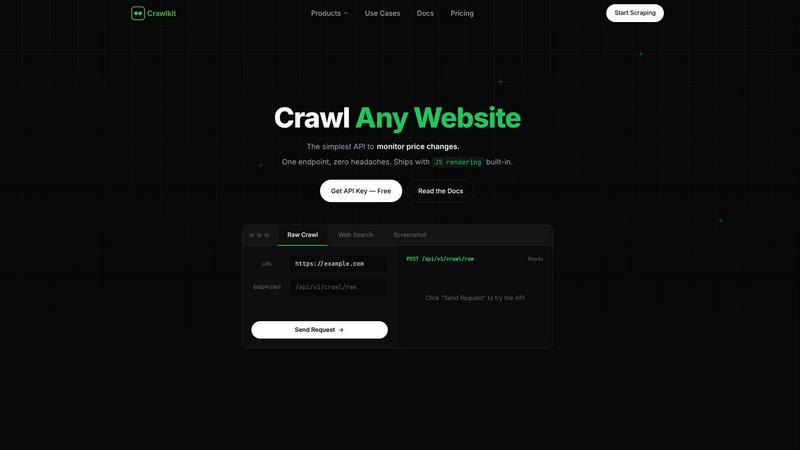

CrawlKit

CrawlKit lets developers scrape any website for data, insights, and real-time monitoring.

About CrawlKit

CrawlKit is a web data extraction platform built for developers and data teams who need reliable, scalable access to web data. Modern web scraping means dealing with rotating proxies, headless browsers, and anti-bot protections; CrawlKit hides that complexity behind a single API that handles everything from proxy rotation to browser rendering. Users can therefore spend their time on the insights the data provides rather than on the mechanics of collecting it. Its outputs range from raw HTML to real-time price monitoring, making it a versatile tool for any team that works with web data.

Features of CrawlKit

Comprehensive Data Extraction

CrawlKit fetches the raw HTML of any URL together with the full response headers and cookies. Developers therefore get a complete snapshot of each page that they can analyze or process further.

Web Search API

With CrawlKit's Web Search API, users can search the web programmatically and receive the results as structured JSON. Teams can build search functionality into their applications without writing and maintaining their own scraping logic.

Real-Time Change Monitoring

The platform automatically monitors pages for changes such as price updates, stock levels, or content edits, and reports them in real time. This is particularly valuable for businesses that need to respond quickly to market fluctuations or changes in product availability.
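The core idea behind change monitoring can be illustrated independently of CrawlKit: take periodic snapshots of the fields you care about and compare consecutive snapshots. The sketch below is a minimal, hypothetical illustration that assumes each snapshot has already been extracted into a dictionary of field names to values (the `diff_snapshots` function and the field names are illustrative only). It does not call CrawlKit's API, whose endpoints are not described here.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical snapshot format (an assumption, not CrawlKit's output):
# field name -> extracted value, e.g. {"price": 19.99, "in_stock": True}
Snapshot = Dict[str, Any]
Change = Tuple[str, Any, Any]  # (field, old value, new value)


def diff_snapshots(old: Snapshot, new: Snapshot) -> List[Change]:
    """Return every field whose value differs between two snapshots.

    A field present in only one snapshot is reported with None on the
    side where it is missing. Results are sorted by field name.
    """
    changes: List[Change] = []
    # Union of keys so that added and removed fields are both detected.
    for field in sorted(old.keys() | new.keys()):
        before, after = old.get(field), new.get(field)
        if before != after:
            changes.append((field, before, after))
    return changes


if __name__ == "__main__":
    yesterday = {"price": 19.99, "in_stock": True, "title": "Widget"}
    today = {"price": 17.49, "in_stock": True, "title": "Widget"}
    for field, before, after in diff_snapshots(yesterday, today):
        print(f"{field}: {before!r} -> {after!r}")
```

In practice the snapshots would come from scheduled scrapes, and a non-empty result would trigger an alert; how CrawlKit itself schedules checks and delivers notifications is not specified in this listing.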

Screenshot Capture

CrawlKit can capture full-page screenshots of any URL and export them as PNG images or PDF files. This is useful for documentation, presentations, or keeping a visual record of how a page looked at a specific point in time.

Use Cases of CrawlKit

E-commerce Price Tracking

For e-commerce businesses, staying ahead of competitors is crucial. CrawlKit lets these companies monitor competitors' prices in real time so they can make informed decisions about their own pricing and promotions.

Market Research

Market researchers can use CrawlKit to extract data from many sources and derive insights into market trends, consumer behavior, and the competitive landscape, without the overhead of building and maintaining their own scraping infrastructure.

Content Aggregation

Content creators and marketers can use CrawlKit to collect data from multiple websites and curate content from different sources. This helps them build comprehensive reports and keep their content fresh and relevant.

Lead Generation from LinkedIn

Companies looking for leads can use CrawlKit to extract professional data from LinkedIn, helping them identify potential clients or partners that match specific criteria and target their outreach more effectively.

Frequently Asked Questions

How reliable is CrawlKit for web scraping?

CrawlKit reports an industry-leading success rate of 98%, maintaining consistent access to data even when websites update their anti-bot protections. This reliability makes it a dependable choice for developers.

What types of data can I extract with CrawlKit?

CrawlKit can extract raw HTML content, web search results, page screenshots, and professional data from platforms such as LinkedIn, covering a wide range of data needs.

Is there a limit to the number of API calls I can make?

CrawlKit offers unlimited API calls across all endpoints, allowing users to scale their data extraction efforts without worrying about hitting limits or incurring additional fees.

Do I need to manage proxies when using CrawlKit?

No. CrawlKit rotates proxies automatically and deals with anti-bot protections for you, so you can focus on using the data instead of managing proxy servers. This makes data extraction simpler and more efficient.