Fallom

Fallom guides your AI agents from first prompt to perfect performance with complete observability.


About Fallom

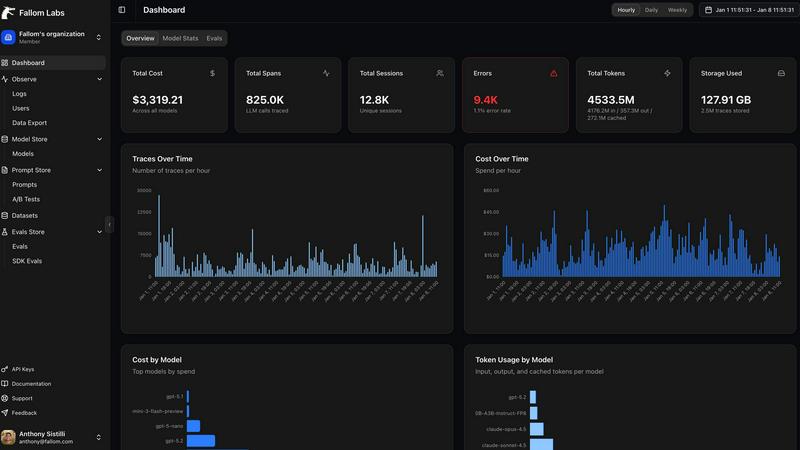

Imagine deploying a sophisticated AI agent to handle customer inquiries, only to be left in the dark when a user reports a strange or unhelpful response. You're flying blind, with no way to replay the exact conversation, see which tools the agent called, or understand why it took so long to reply. This is the black-box problem of production AI, and it's where the journey with Fallom begins.

Fallom is an AI-native observability platform for LLM and agent workloads. It's designed for engineering teams who are moving beyond prototypes and need to operate their AI applications with the same confidence, reliability, and insight as any other critical software system. With a single OpenTelemetry-native SDK, you can instrument your application in minutes, turning opaque AI calls into transparent, traceable operations. Fallom's core value proposition is complete visibility: every prompt, every model output, every tool call, and every cost, in real time. It groups these interactions by session and user for full context, provides timing waterfalls for debugging complex, multi-step agents, and delivers enterprise-ready audit trails for compliance. Your journey from uncertainty to mastery over your AI operations starts here.

Features of Fallom

End-to-End LLM Tracing

Fallom provides a complete, real-time trace for every LLM interaction in your production environment. This isn't just logging; it's a detailed replay that captures the full narrative of each call. You see the exact prompt sent, the model's raw output, the number of tokens consumed, the latency down to the millisecond, and the precise per-call cost. This granular visibility is the foundation for debugging issues, optimizing performance, and understanding exactly what is happening inside your AI-powered features at any given moment.
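The per-call record described above can be pictured as a small data structure. The sketch below is illustrative only and is not Fallom's SDK or schema: the model name `model-a`, its per-token prices, and the `traced_call` helper are hypothetical. It shows how latency is measured around a call and how per-call cost follows from token counts.

```python
import time
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical prices in USD per million tokens; real prices vary by provider and change over time.
PRICES_PER_MTOK = {"model-a": {"input": 1.00, "output": 4.00}}


@dataclass(frozen=True)
class LLMTrace:
    """One captured LLM call: what was sent, what came back, how long it took, what it cost."""
    model: str
    prompt: str
    output: str
    input_tokens: int
    output_tokens: int
    latency_ms: float

    @property
    def cost_usd(self) -> float:
        price = PRICES_PER_MTOK[self.model]
        return (self.input_tokens * price["input"]
                + self.output_tokens * price["output"]) / 1_000_000


def traced_call(model: str, prompt: str,
                call_fn: Callable[[str], Tuple[str, int, int]]) -> LLMTrace:
    """Time call_fn (a stand-in for a provider client) and wrap its result in a trace.

    call_fn is assumed to return (output_text, input_tokens, output_tokens).
    """
    start = time.perf_counter()
    output, input_tokens, output_tokens = call_fn(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    return LLMTrace(model, prompt, output, input_tokens, output_tokens, latency_ms)
```

With a fake client that reports 1,000 input and 500 output tokens, the resulting trace's `cost_usd` is (1,000 × 1.00 + 500 × 4.00) / 1,000,000 = $0.003 under these placeholder prices.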

Enterprise Compliance & Audit Trails

For teams operating in regulated industries or under strict internal governance, Fallom treats compliance as a first-class concern. The platform maintains immutable, complete audit trails of every LLM interaction to help meet requirements such as the EU AI Act, SOC 2, and GDPR. Features include detailed input/output logging, model version tracking for reproducibility, and user consent tracking. The result is a verifiable record of your AI's behavior and decisions, turning a potential compliance headache into a managed, transparent process.
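The listing does not say how Fallom makes its audit trails immutable. One common technique for tamper evidence is a hash chain: each entry stores a SHA-256 digest covering its own record and the previous entry's digest, so editing a past record breaks verification. A minimal sketch, with hypothetical record fields such as `model_version` and `consent`:

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: str) -> str:
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry's hash covers its record and the previous hash."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        payload = json.dumps(record, sort_keys=True)  # canonical form so hashing is repeatable
        entry_hash = _digest(prev_hash, payload)
        # Store a deep copy so later changes to the caller's dict don't alter the log.
        self._entries.append(
            {"record": json.loads(payload), "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry, or one removed mid-chain, fails."""
        prev_hash = GENESIS
        for entry in self._entries:
            payload = json.dumps(entry["record"], sort_keys=True)
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, payload):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"model_version": "v1", "consent": True, "input": "hi", "output": "hello"})
print(log.verify())
```

A hash chain makes tampering detectable rather than impossible, and it cannot by itself detect entries truncated from the tail; a real deployment would also need to protect the latest hash, for example by storing it separately.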

Cost Attribution & Spend Transparency

AI model costs can spiral unpredictably without proper oversight. Fallom solves this by automatically attributing spend across every dimension that matters to your business. Track costs per model (like GPT-4o vs. Claude), per user, per team, or per customer. The platform provides live dashboards and summaries, such as "This month's spend: $25.62," broken down by provider. This empowers teams to budget accurately, implement chargebacks, and make cost-aware decisions about model selection and usage patterns.
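Attributing spend is, at its core, a group-by over per-call costs. This sketch assumes each trace carries hypothetical `model`, `user`, and `team` attributes plus a `cost_usd` value; it is not Fallom's implementation. It uses `Decimal` so that summing many small per-call costs does not accumulate binary floating-point error.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, Iterable, Mapping


def spend_by(traces: Iterable[Mapping], dimension: str) -> Dict[str, Decimal]:
    """Sum cost_usd per distinct value of `dimension` (e.g. "model", "user", "team")."""
    totals: Dict[str, Decimal] = defaultdict(lambda: Decimal("0"))
    for trace in traces:
        totals[trace[dimension]] += trace["cost_usd"]
    return dict(totals)


# Made-up traces for illustration.
traces = [
    {"model": "model-a", "user": "u1", "team": "search", "cost_usd": Decimal("0.010")},
    {"model": "model-b", "user": "u1", "team": "search", "cost_usd": Decimal("0.020")},
    {"model": "model-a", "user": "u2", "team": "support", "cost_usd": Decimal("0.030")},
]
print(spend_by(traces, "model"))  # {'model-a': Decimal('0.040'), 'model-b': Decimal('0.020')}
```

The same function answers per-user, per-team, or per-customer questions by changing only the `dimension` argument.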

Session & Customer Context

Understanding an isolated LLM call is useful, but understanding the entire user journey is transformative. Fallom groups related traces into sessions, allowing you to see the complete sequence of interactions for a specific user or customer. This context is crucial for diagnosing complex issues, analyzing user behavior, and calculating the true cost-to-serve. For example, you can see that user "user_2nXk" made 12 traces costing a total of $0.045, giving a holistic view of AI engagement and expenditure at the individual level.
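Session grouping can be sketched as bucketing traces by a session identifier and ordering each bucket by time, with a per-user roll-up on top. The `session_id`, `user_id`, `timestamp`, and `cost_usd` fields are assumptions for illustration, not Fallom's data model.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, List, Mapping, Sequence


def group_sessions(traces: Sequence[Mapping]) -> Dict[str, List[Mapping]]:
    """Bucket traces by session_id, with each bucket in chronological order."""
    sessions: Dict[str, List[Mapping]] = defaultdict(list)
    for trace in traces:
        sessions[trace["session_id"]].append(trace)
    for bucket in sessions.values():
        bucket.sort(key=lambda t: t["timestamp"])
    return dict(sessions)


def user_summary(traces: Sequence[Mapping], user_id: str) -> Dict[str, object]:
    """Trace count, session count, and total cost for one user."""
    mine = [t for t in traces if t["user_id"] == user_id]
    return {
        "traces": len(mine),
        "sessions": len({t["session_id"] for t in mine}),
        "cost_usd": sum((t["cost_usd"] for t in mine), Decimal("0")),
    }
```

A roll-up such as "user_2nXk made 12 traces costing $0.045" is then a single `user_summary(traces, "user_2nXk")` call over that user's traces.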

Use Cases of Fallom

Debugging Complex AI Agent Workflows

When a multi-step agent (one that searches a database, calls an API, and then formats a response) fails or behaves unexpectedly, traditional logging falls short. Engineers use Fallom's timing waterfall visualizations and tool call visibility to replay the agent's entire decision-making process. They can pinpoint exactly which step introduced latency, see the arguments passed to a failed function, and examine the intermediate LLM responses, turning hours of guesswork into minutes of precise diagnosis.
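A timing waterfall is essentially a list of spans drawn against a shared clock. The text-mode sketch below uses a hypothetical `Span` type with start and end offsets in milliseconds and a nesting depth; it is not Fallom's visualization, but it shows how the slow step in the three-step agent described above stands out. The timings are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Span:
    name: str
    start_ms: float
    end_ms: float
    depth: int = 0  # 0 = root (the whole agent run), 1 = a step inside it

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def render_waterfall(spans: List[Span], width: int = 40) -> str:
    """Draw each span as a bar whose offset and length are proportional to its timing."""
    t0 = min(s.start_ms for s in spans)
    t1 = max(s.end_ms for s in spans)
    scale = width / (t1 - t0) if t1 > t0 else 0.0
    lines = []
    for s in sorted(spans, key=lambda sp: sp.start_ms):
        offset = int((s.start_ms - t0) * scale)
        length = max(1, int(s.duration_ms * scale))
        label = ("  " * s.depth + s.name).ljust(20)
        lines.append(f"{label}|{' ' * offset}{'#' * length}  {s.duration_ms:.0f} ms")
    return "\n".join(lines)


def slowest_step(spans: List[Span]) -> Span:
    """The non-root span with the longest duration."""
    return max((s for s in spans if s.depth > 0), key=lambda s: s.duration_ms)


# Hypothetical timings for a search -> API call -> format agent run.
run = [
    Span("agent_run", 0, 1000),
    Span("search_db", 0, 150, depth=1),
    Span("call_api", 150, 850, depth=1),
    Span("format_response", 850, 1000, depth=1),
]
print(render_waterfall(run))
print("slowest:", slowest_step(run).name)
```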

Ensuring Compliance and Audit Readiness

A financial services company deploying an AI investment advisor must adhere to stringent regulatory standards. By implementing Fallom, they automatically generate the required audit trails for every piece of financial advice generated. Compliance officers can verify model versions, track user consent for data processing, and review logged inputs and outputs to demonstrate due diligence and responsible AI governance during an audit.

Managing and Optimizing AI Operational Costs

A SaaS product with a new AI feature sees rapid adoption but fears ballooning, unpredictable API costs. The engineering team integrates Fallom and immediately gains a real-time dashboard showing spend per model and per customer segment. They identify that a small group of power users is driving 80% of the costs using a premium model, enabling them to implement smart usage tiers or optimize prompts to reduce expenses while maintaining performance.
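Finding the "small group of power users" is a Pareto-style calculation: sort users by spend and take the smallest prefix whose cumulative share reaches a threshold. A minimal sketch, assuming per-user totals have already been computed; the user IDs and amounts below are made up and do not come from Fallom.

```python
from decimal import Decimal
from typing import Dict, List


def top_spenders(spend_by_user: Dict[str, Decimal],
                 share: Decimal = Decimal("0.8")) -> List[str]:
    """Smallest set of highest-spending users whose combined spend reaches `share` of the total."""
    total = sum(spend_by_user.values(), Decimal("0"))
    if total == 0:
        return []
    threshold = share * total
    running = Decimal("0")
    result: List[str] = []
    for user, cost in sorted(spend_by_user.items(), key=lambda kv: kv[1], reverse=True):
        if running >= threshold:
            break
        result.append(user)
        running += cost
    return result


# Made-up monthly totals for illustration.
spend = {"u1": Decimal("50"), "u2": Decimal("30"), "u3": Decimal("10"), "u4": Decimal("10")}
print(top_spenders(spend))  # ['u1', 'u2']
```

Here two of four users account for 80% of spend, which is the kind of result that would justify usage tiers or prompt optimization targeted at those users.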

Safe Model Deployment and A/B Testing

Before fully migrating from GPT-4 to the newer Claude 3.5 model, a team uses Fallom to run a live, controlled A/B test. They safely split traffic (e.g., 70/30) between the two models directly within the platform. Fallom then allows them to compare key metrics side-by-side: not just latency and cost, but also custom evaluation scores for accuracy and relevance. This data-driven approach ensures they can roll out the new model with confidence, backed by empirical evidence.
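The listing does not say how Fallom assigns requests to variants. A common approach to a sticky 70/30 split is to hash a stable key, such as a user ID, into [0, 1] and compare it against cumulative weights, so each user consistently sees the same model. The variant names `control` and `candidate` are placeholders.

```python
import hashlib
from typing import Dict


def assign_variant(user_id: str, split: Dict[str, float]) -> str:
    """Deterministically map user_id to a variant according to the given weights.

    Weights are assumed non-negative and summing to 1.0, e.g. {"control": 0.7, "candidate": 0.3}.
    """
    if not split:
        raise ValueError("split must contain at least one variant")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # approximately uniform on [0, 1]
    cumulative = 0.0
    for variant, weight in split.items():
        cumulative += weight
        if bucket < cumulative:
            return variant
    return list(split)[-1]  # guard for weights summing to slightly under 1.0


SPLIT = {"control": 0.7, "candidate": 0.3}
print(assign_variant("user_2nXk", SPLIT))
```

Hashing rather than random choice keeps assignment stable across requests without storing any state; comparing latency, cost, and evaluation scores then becomes a group-by on the variant label.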

Frequently Asked Questions

How difficult is it to integrate Fallom into my existing application?

Integration is designed to be quick and straightforward. Fallom provides a single, OpenTelemetry-native SDK that can be added to your codebase in minutes; the platform advertises "OTEL Tracing in Under 5 Minutes." There's no need to rip and replace your existing logging: the SDK instruments your LLM calls and sends rich telemetry data to your Fallom dashboard with minimal configuration.

Does Fallom support all major LLM providers?

Yes. Fallom is built on OpenTelemetry, an open standard, which promotes compatibility and helps avoid vendor lock-in. The platform works with all major providers, including OpenAI (GPT models), Anthropic (Claude), Google (Gemini), and others, so you can monitor, trace, and attribute costs for all your AI interactions from a single, unified pane of glass, regardless of which model or API you are calling.

How does Fallom handle sensitive or private user data?

Fallom is built for enterprise environments and offers robust privacy controls. You can enable "Privacy Mode," which allows you to maintain full telemetry for tracing and debugging while disabling content capture for sensitive prompts and responses. You can configure content redaction rules, opt for metadata-only logging, and set different privacy levels per environment (e.g., strict in production, verbose in development) to protect user confidentiality.
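The privacy levels described above can be modeled as a per-environment policy applied to each span before export. The mode names, environment names, and the two regex redaction rules in this sketch are hypothetical, not Fallom configuration keys, and real PII detection needs far more than a pair of patterns.

```python
import re
from typing import Dict

# Hypothetical policy: environment -> capture mode. Unknown environments get the strictest mode.
PRIVACY_BY_ENV = {"development": "full", "staging": "redacted", "production": "metadata_only"}

# Illustrative redaction rules only; nowhere near complete PII coverage.
REDACTION_RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]

CONTENT_KEYS = ("prompt", "output")


def redact(text: str) -> str:
    for pattern, replacement in REDACTION_RULES:
        text = pattern.sub(replacement, text)
    return text


def prepare_span(span: Dict[str, object], environment: str) -> Dict[str, object]:
    """Return a copy of `span` with content kept, redacted, or dropped per environment."""
    mode = PRIVACY_BY_ENV.get(environment, "metadata_only")
    out = dict(span)
    for key in CONTENT_KEYS:
        if key not in out:
            continue
        if mode == "metadata_only":
            del out[key]  # keep timing, tokens, and cost; drop the text itself
        elif mode == "redacted":
            out[key] = redact(str(out[key]))
    return out
```

Defaulting unknown environments to metadata-only means a misconfigured deployment fails closed rather than capturing sensitive content.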

What kind of performance overhead does the Fallom SDK add?

The SDK is engineered for minimal performance impact in production environments. It operates asynchronously, meaning the tracing and data collection happen in the background without blocking your application's main execution thread. While there is always some overhead with any observability tool, the design prioritizes efficiency to ensure you gain deep insights without introducing significant latency to your end-user experience.
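The usual pattern behind non-blocking telemetry, used for example by OpenTelemetry's batch span processor, is a bounded in-memory queue drained by a background worker that exports in batches. The sketch below illustrates that pattern with the standard library only; `export_fn` stands in for a network call, and none of the names are Fallom's.

```python
import queue
import threading
from typing import Any, Callable, List


class BackgroundExporter:
    """Buffers spans in a bounded queue and exports them in batches from a worker thread."""

    def __init__(self, export_fn: Callable[[List[Any]], None], max_batch: int = 64,
                 max_queue: int = 10_000, flush_interval: float = 1.0):
        self._export_fn = export_fn
        self._max_batch = max_batch
        self._flush_interval = flush_interval
        self._queue = queue.Queue(maxsize=max_queue)
        self._stop = threading.Event()
        self.dropped = 0          # spans discarded because the queue was full
        self.failed_batches = 0   # batches whose export raised an exception
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def record(self, span: Any) -> None:
        """Hot-path call: never blocks; drops the span rather than slow the caller."""
        try:
            self._queue.put_nowait(span)
        except queue.Full:
            self.dropped += 1  # unsynchronized; approximate under heavy concurrency

    def _take(self, limit: int) -> List[Any]:
        """Pull up to `limit` queued spans without waiting."""
        batch: List[Any] = []
        while len(batch) < limit:
            try:
                batch.append(self._queue.get_nowait())
            except queue.Empty:
                break
        return batch

    def _export(self, batch: List[Any]) -> None:
        try:
            self._export_fn(batch)
        except Exception:
            self.failed_batches += 1  # keep the worker alive if an export fails

    def _run(self) -> None:
        while not self._stop.is_set():
            try:
                first = self._queue.get(timeout=self._flush_interval)
            except queue.Empty:
                continue
            self._export([first] + self._take(self._max_batch - 1))
        # Shutdown requested: flush whatever is still buffered.
        while batch := self._take(self._max_batch):
            self._export(batch)

    def shutdown(self) -> None:
        self._stop.set()
        self._worker.join()
```

The trade-off is explicit: when the buffer fills, spans are dropped instead of slowing the request path, which is why exporters of this kind usually expose a dropped-span counter.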

You may also like:

Anti Tempmail

Transparent email intelligence verification API for Product, Growth, and Risk teams

My Deepseek API

Affordable, Reliable, Flexible - Deepseek API for All Your Needs