ggml.ai

About ggml.ai

ggml.ai provides ggml, a tensor library for machine learning that targets good performance on commodity hardware. Aimed at developers and researchers, it offers features such as integer quantization and automatic differentiation. Its goal is to simplify model deployment and make on-device inference practical and efficient.

ggml.ai offers a free-to-use library under the MIT license. No pricing plans are currently listed; users can support the project by contributing or sponsoring. Since there is no paid tier, there is nothing to upgrade to: the library and its community resources are open to everyone.

The ggml.ai website has a simple layout that makes it easy to find the library's core features, related machine learning tools and projects, and information on how to get involved.

How ggml.ai works

To get started, users visit the ggml.ai website, download the library, and integrate it into their own projects. Documentation is provided to ease onboarding and covers features such as automatic differentiation and model optimization.

Key Features for ggml.ai

Integer quantization support

Integer quantization support is a standout feature of ggml.ai. Quantization stores model weights as low-bit integers plus a small amount of scaling information instead of 32-bit floats, which substantially reduces the memory footprint of large models and lets them run on commodity hardware, usually at a small cost in accuracy. This makes ggml.ai a useful resource for developers focused on optimization.
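To make the idea concrete, here is a minimal sketch of block-wise symmetric 8-bit quantization in plain Python. It is not ggml's code or API: the names `quantize`, `dequantize`, and `BLOCK_SIZE`, and the block size of 32, are assumptions chosen for illustration only.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # illustrative block size (an assumption, not taken from ggml)

Block = Tuple[float, List[int]]  # (scale, signed 8-bit quantized values)


def quantize_block(values: List[float]) -> Block:
    """Map one block of floats to a per-block scale and integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Choose the scale so the largest magnitude maps to 127; avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quants = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, quants


def quantize(values: List[float]) -> List[Block]:
    """Split the input into fixed-size blocks and quantize each independently."""
    return [
        quantize_block(values[i:i + BLOCK_SIZE])
        for i in range(0, len(values), BLOCK_SIZE)
    ]


def dequantize(blocks: List[Block]) -> List[float]:
    """Reconstruct approximate floats: value is scale * integer."""
    out: List[float] = []
    for scale, quants in blocks:
        out.extend(scale * q for q in quants)
    return out
```

If each scale is stored as a 32-bit float, a block of 32 values takes 32 + 4 = 36 bytes instead of 128 bytes as 32-bit floats. The reconstruction error per value is at most half of that block's scale, which is why a separate scale per block helps when weight magnitudes vary across a tensor.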

Automatic differentiation

Automatic differentiation is a key feature of ggml.ai. It computes the gradients of a model's output with respect to its parameters automatically, which is what training and optimization require. Developers do not have to derive and code gradients by hand, so model adjustments stay accurate and efficient while they focus on the model itself.
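As an illustration of the technique, the following is a minimal sketch of reverse-mode automatic differentiation on scalars in plain Python. It is independent of ggml's API: the `Value` class and its methods are hypothetical names introduced here, and only addition and multiplication are supported.

```python
class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # inputs this value was computed from
        self._grad_fns = grad_fns  # one function per parent: upstream grad -> parent grad

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        """Accumulate d(self)/d(node) into node.grad for every node in the graph."""
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent in node._parents:
                visit(parent)
            order.append(node)  # post-order: parents come before their consumers

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule.
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


x = Value(3.0)
y = Value(4.0)
z = x * y + x * x  # z = xy + x^2
z.backward()
print(z.data, x.grad, y.grad)  # 21.0 10.0 3.0
```

The forward pass records each operation and its local derivatives, and the backward pass visits the recorded graph in reverse, so every gradient is obtained in a single sweep regardless of how many inputs there are.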

Broad hardware support

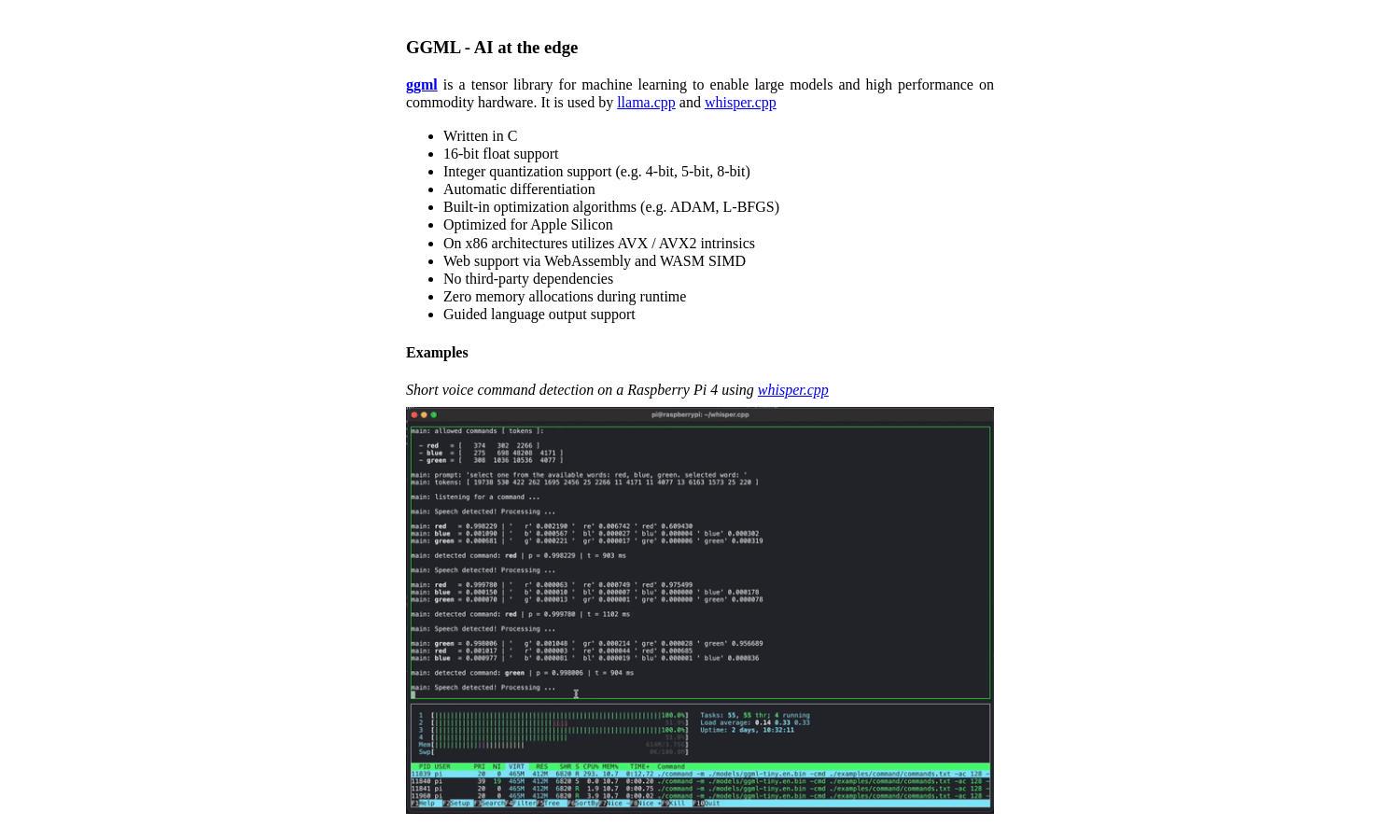

ggml.ai's broad hardware support allows the tensor library to run on a range of platforms, from a Raspberry Pi to desktop machines. Users can deploy the same models on different devices, which gives machine learning developers flexibility in choosing where inference runs.
